45 soft labels deep learning

PDF Deep Learning using Support Vector Machines … classification tasks, many of these "deep learning" models employ the softmax activation function to learn output labels in 1-of-K format. In this paper, we demonstrate a small but consistent advantage of replacing the softmax layer with a linear support vector machine. Learning minimizes a margin-based loss instead of the cross-entropy loss. In al…

Loss and Loss Functions for Training Deep Learning Neural Networks Almost universally, deep learning neural networks are trained under the framework of maximum likelihood using cross-entropy as the loss function. Most modern neural networks are trained using maximum likelihood. This means that the cost function is […] described as the cross-entropy between the training data and the model distribution.
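To make the contrast between a margin-based loss and cross-entropy concrete, here is a minimal standard-library sketch that computes both for one example's raw class scores. It uses the common Weston-Watkins multiclass hinge loss with a margin of 1; the paper's exact SVM objective may differ, and the function names and scores are hypothetical.

```python
import math

def softmax_cross_entropy(scores, target):
    """Cross-entropy of softmax(scores) against the integer class `target`."""
    m = max(scores)  # subtract the max so exp() cannot overflow
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_sum_exp - scores[target]

def multiclass_hinge(scores, target, margin=1.0, squared=False):
    """Sum over wrong classes j of max(0, s_j - s_target + margin), optionally squared."""
    total = 0.0
    for j, s in enumerate(scores):
        if j == target:
            continue
        violation = max(0.0, s - scores[target] + margin)
        total += violation ** 2 if squared else violation
    return total

scores = [3.2, 5.1, -1.7]  # hypothetical raw scores; the true class is 0
print(softmax_cross_entropy(scores, 0))
print(multiclass_hinge(scores, 0))
print(multiclass_hinge(scores, 0, squared=True))
```

The hinge loss drops to exactly zero once the correct class beats every other class by the margin, whereas cross-entropy keeps rewarding larger gaps between the scores.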

MetaLabelNet: Learning to Generate Soft-Labels from Noisy-Labels Real-world datasets commonly have noisy labels, which negatively affects the performance of deep neural networks (DNNs). In order to address this problem, we propose a label noise robust learning algorithm, in which the base classifier is trained on soft-labels that are produced according to a meta-objective. In each iteration, before conventional training, the meta-objective reshapes the loss ...


(PDF) Deep learning with noisy labels: Exploring techniques and ... that have trained deep learning models on medical image datasets with noisy labels. Section V contains our experimental results with three medical image datasets, where we investigate the ...

Deep learning with noisy labels: Exploring techniques and remedies in ... Once this smooth label is obtained, the deep learning model is trained by minimizing the Kullback-Leibler (KL) divergence between the model output and the smooth noisy label. Label smoothing is a well-known trick for improving the test performance of deep learning models (Szegedy, Vanhoucke, Ioffe, Shlens, Wojna, 2016; Müller, Kornblith ...

How to Develop Voting Ensembles With Python - Machine Learning Mastery Voting is an ensemble machine learning algorithm. For regression, a voting ensemble makes a prediction that is the average of the predictions of multiple other regression models. In classification, a hard voting ensemble sums the votes for crisp class labels from other models and predicts the class with the most votes. A soft voting ensemble sums the predicted probabilities ...
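Hard and soft voting give different answers when the models disagree with different confidence. A minimal sketch, assuming every model outputs a probability vector over the same class order; the three models' outputs below are made up for illustration.

```python
from collections import Counter

def hard_vote(predicted_labels):
    """Return the crisp label with the most votes (ties go to the label seen first)."""
    return Counter(predicted_labels).most_common(1)[0][0]

def soft_vote(probability_rows):
    """Sum each class's predicted probability across models; return (winner, totals)."""
    n_classes = len(probability_rows[0])
    totals = [sum(row[k] for row in probability_rows) for k in range(n_classes)]
    winner = max(range(n_classes), key=lambda k: totals[k])
    return winner, totals

# Hypothetical per-model probabilities for classes [0, 1]
model_probs = [[0.45, 0.55], [0.40, 0.60], [0.95, 0.05]]
crisp_votes = [max(range(2), key=lambda k: row[k]) for row in model_probs]

print(hard_vote(crisp_votes))     # crisp votes are [1, 1, 0]
print(soft_vote(model_probs)[0])
```

Two of the three models narrowly prefer class 1, so hard voting picks 1, but the third model's confident 0.95 for class 0 tips the summed probabilities (1.80 vs 1.20) toward class 0.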

Meta Soft Label Generation for Noisy Labels - arxiv-vanity.com The existence of noisy labels in the dataset causes significant performance degradation for deep neural networks (DNNs). To address this problem, we propose a Meta Soft Label Generation algorithm called MSLG, which can jointly generate soft labels using meta-learning techniques and learn DNN parameters in an end-to-end fashion. Our approach adapts the meta-learning paradigm to estimate optimal ...

List of Deep Learning Layers - MATLAB & Simulink - MathWorks crop2dLayer: a 2-D crop layer applies 2-D cropping to the input. crop3dLayer: a 3-D crop layer crops a 3-D volume to the size of the input feature map. scalingLayer (Reinforcement Learning Toolbox): a scaling layer linearly scales and biases an input array U, giving an output Y = Scale.*U + Bias.

How to make use of "soft" labels in binary classification - Quora Answer: If you're in possession of soft labels, then you're in luck, because you have more information about the ground truth than you would from binary labels alone: you have the true class and its degree. For one, you're entitled to ignore the soft information and treat the problem as a bog-sta...

Deep Learning with Label Noise / Noisy Labels - GitHub This repo consists of a collection of papers and repos on the topic of deep learning with noisy labels. All methods listed below are briefly explained in the paper Image Classification with Deep Learning in the Presence of Noisy Labels: A Survey. More information about the topic can also be found on ...
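Rather than ignoring the degree, one option is to feed the soft value straight into binary cross-entropy as the target. A minimal standard-library sketch, assuming labels are probabilities in [0, 1]; the 0.7 target is invented for illustration.

```python
import math

def binary_cross_entropy(p, y, eps=1e-12):
    """BCE between predicted probability p and a target y in [0, 1].

    y does not have to be 0 or 1: a soft label such as 0.7 is used directly.
    """
    p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
    return -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))

soft_target = 0.7  # hypothetical degree of belief that the example is positive
for p in (0.5, 0.7, 0.99):
    print(p, round(binary_cross_entropy(p, soft_target), 4))
```

With a soft target the loss is smallest when the prediction equals the target (p = 0.7 here), so the model is pushed toward that confidence instead of toward 0 or 1. Rounding the label to 0 or 1 first recovers the ordinary hard-label case.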

Artificial Intelligence, Machine learning, and Deep Learning The Industry 4.0 revolution has turned technologies such as Artificial Intelligence, Machine Learning, and Deep Learning into buzzwords in multiple industries. The overuse of these wor ... as in the above definition of machine learning, is structured data. We can label the pictures of dogs and cats in a way that will define specific ...

How to Develop an Ensemble of Deep Learning Models in Keras Aug 28, 2020 · Deep learning neural network models are highly flexible nonlinear algorithms capable of learning a near infinite number of mapping functions. A frustration with this flexibility is the high variance in a final model. The same neural network model trained on the same dataset may find one of many different possible "good enough" solutions each time […]

How to map softMax output to labels in MXNet - Stack Overflow In deep learning, the predictions are often encoded using one-hot vectors. I am using MXNet to create a simple neural network that classifies images of animals as cats, dogs, horses, etc. ... The softmax output only gives an array without any mapping of the results to the corresponding labels. Asked Mar 2 ...

DeepNotes | Deep Learning Demystified Note that the numerical range of floating-point numbers in NumPy is limited: for float64 the upper bound is about \(10^{308}\). With exponentials it is easy to overshoot that limit, in which case NumPy's exp overflows to inf and the normalized result becomes nan. To make our softmax function numerically stable, we normalize the values in the vector by multiplying the numerator and denominator by a constant \(C\); choosing \(\log C = -\max_j x_j\) shifts the largest exponent to zero, so no term can overflow.
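Both points above, a numerically stable softmax and mapping its output back to labels, fit in a few framework-agnostic lines. A minimal pure-Python sketch using \(C = e^{-\max_j x_j}\); the class-name list is a hypothetical stand-in for whatever index-to-label order was used during training.

```python
import math

def stable_softmax(logits):
    """Softmax that subtracts max(logits) before exponentiating.

    Multiplying numerator and denominator by C = exp(-max(logits)) leaves the
    result unchanged but keeps every exponent <= 0, so exp() cannot overflow.
    """
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def to_label(probs, class_names):
    """Map a probability vector to (class name, probability) via argmax."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return class_names[best], probs[best]

logits = [1000.0, 1001.0, 999.0]  # a naive math.exp(1000.0) raises OverflowError
probs = stable_softmax(logits)
print(to_label(probs, ["cat", "dog", "horse"]))
```

The mapping only works if `class_names` is in exactly the same order as the network's output units, which is the information missing from the bare softmax array.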

A review of deep learning methods for semantic ... - ScienceDirect May 01, 2021 · A review of deep learning methods for semantic segmentation of remote sensing imagery ... the limited non-conventional remote sensing data sets with labels is an obstacle to developing and evaluating new deep learning methods. ... Applied Soft Computing, 70 (2018), pp. 41-65. ...

PDF Unsupervised Person Re-Identification by Soft Multilabel Learning ... in the absence of pairwise labels across disjoint camera views. To overcome this problem, we propose a deep model for soft multilabel learning for unsupervised RE-ID. The idea is to learn a soft multilabel (a real-valued label likelihood vector) for each unlabeled person by comparing the unlabeled person with a set of known reference persons ...

Image classification with deep learning in the presence of noisy labels ... Afterward, the teacher predicts soft labels for noisy data, and the student is again trained on these soft labels for fine-tuning. 3.2.3. Using data with just noisy labels. ... By effectively dealing with noisy labels, deep learning algorithms can be fed with massive datasets. Moreover, there are research opportunities for alternative usage of ...

PDF A Multi-label Multimodal Deep Learning Framework for Imbalanced Data ... labels. Deep learning has brought unprecedented advances in natural language processing, computer vision, and speech processing [3], [4], [5]. In particular, multimodal deep learn… of words with a soft constraint: \(w_i^\top w_j + b_i + b_j = \log(X_{ij})\), where \(X_{ij}\) is the co-occurrence count of the word pair \(i\) and \(j\), \(w_i\) and \(w_j\) are the word vectors for words \(i\) and \(j\), and \(b_i\) and \(b_j\) are the ...

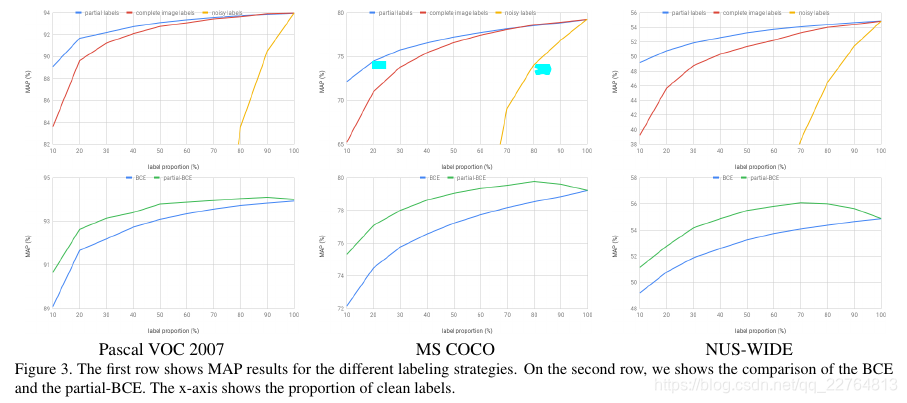

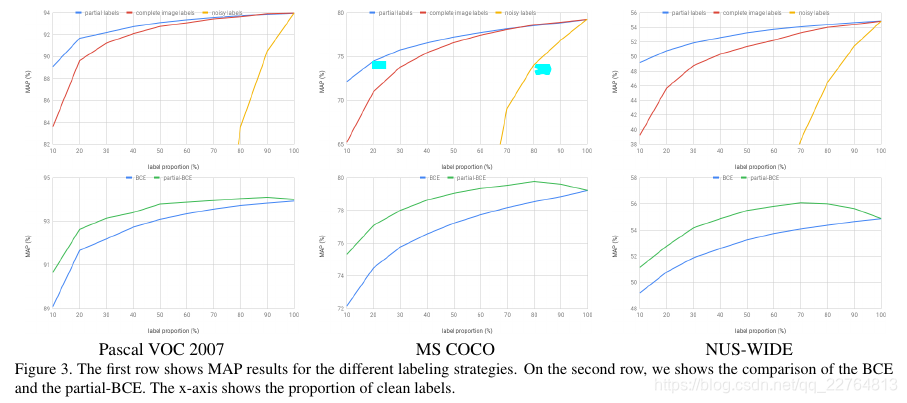

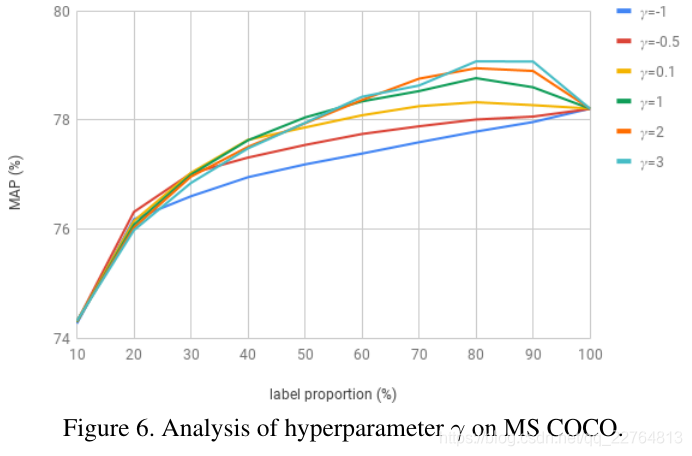

[multi-label] Learning a Deep ConvNet for Multi-label Classification with Partial Labels - Cat and Orange's Blog (猫猫与橙子的博客) ...

transferlearning/awesome_paper.md at master - GitHub May 24, 2022 · 20210202 ICLR-21 Rethinking Soft Labels for Knowledge Distillation: A Bias-Variance Tradeoff Perspective. Rethink soft labels for KD from a bias-variance tradeoff perspective; ... Domain adaptation using deep learning with cross-grafted stacks; using cross-domain grafted stacks to …


PDF Pseudo Labels and Soft Multi-part Corresponding Similarity for ... In addition, a novel loss function is proposed to support learning with pseudo labels and soft multi- ... differences between deep learning features [19], [20], [22], [33]. However, without label ...

Chapter 20 K-means Clustering | Hands-On Machine Learning … A Machine Learning Algorithmic Deep Dive Using R. 20.3 Defining clusters. The basic idea behind k-means clustering is constructing clusters so that the total within-cluster variation is minimized. There are several k-means algorithms available for doing this. The standard algorithm is the Hartigan-Wong algorithm (Hartigan and Wong 1979), which defines the total within …
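The quantity k-means tries to minimize can be computed directly for any assignment. A minimal Python sketch of the total within-cluster sum of squared Euclidean distances; the points and assignments are made up, and this only evaluates the objective, it is not the Hartigan-Wong algorithm itself.

```python
def total_within_ss(points, assignments, k):
    """Sum of squared Euclidean distances from each point to its cluster centroid."""
    dims = len(points[0])
    total = 0.0
    for c in range(k):
        members = [p for p, a in zip(points, assignments) if a == c]
        if not members:
            continue  # an empty cluster contributes nothing
        centroid = [sum(p[d] for p in members) / len(members) for d in range(dims)]
        total += sum(
            sum((p[d] - centroid[d]) ** 2 for d in range(dims)) for p in members
        )
    return total

points = [(0.0, 0.0), (0.0, 2.0), (10.0, 10.0), (10.0, 12.0)]
print(total_within_ss(points, [0, 0, 1, 1], 2))  # compact clusters
print(total_within_ss(points, [0, 1, 0, 1], 2))  # poor clusters
```

A k-means algorithm searches over assignments (and centroids) to make this number as small as possible; the first assignment above is clearly the better of the two.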

Softmax Classifiers Explained - PyImageSearch Last week, we discussed Multi-class SVM loss; specifically, the hinge loss and squared hinge loss functions. A loss function, in the context of Machine Learning and Deep Learning, allows us to quantify how "good" or "bad" a given classification function (also called a "scoring function") is at correctly classifying data points in our dataset.

How to Create More Efficient Deep Learning Models These types of optimizations are becoming even more important now as the new improvements in deep learning models also bring an increase in the number of parameters, resources requirements to train, latency, storage requirements, etc. ... We can also use the teacher network to create soft labels that we can use in the loss function alongside ...
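A common way to combine a teacher's soft labels with the usual loss is temperature-scaled knowledge distillation. A minimal standard-library sketch, assuming a temperature `T`, a mixing weight `alpha`, and the conventional \(T^2\) rescaling of the soft term; all values are illustrative, not settings from the article.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, hard_label, T=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE on the hard label."""
    teacher_soft = softmax(teacher_logits, T)  # the teacher's soft labels
    student_soft = softmax(student_logits, T)
    soft_term = (T ** 2) * kl_divergence(teacher_soft, student_soft)
    hard_term = -math.log(softmax(student_logits)[hard_label])
    return alpha * soft_term + (1.0 - alpha) * hard_term

print(distillation_loss([2.0, 0.5, -1.0], [3.0, 1.0, -2.0], hard_label=0))
```

When the student already matches the teacher, the soft term vanishes and only the ordinary cross-entropy on the hard label remains.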

Unable to locate the downloaded datasets in Google Colab - Part 1 (2019) - Deep Learning Course ...

PDF MixNN: Combating Noisy Labels in Deep Learning by Mixing with Nearest ... During an "early learning" phase, deep neural networks were observed to fit the clean samples before memorizing the mislabeled samples. In this paper, we dig deeper into the representation ... [24] iteratively updates the labels with soft or hard pseudo-labels. PENCIL [26] refines the relabel procedure without using prior information ...
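One simple way to iteratively move a stored label toward soft or hard pseudo-labels is a momentum-style blend. This is a hypothetical sketch of the general idea (the `momentum` value and the update rule are assumptions), not the exact procedure of [24] or PENCIL.

```python
def update_label(current, model_probs, momentum=0.9, hard=False):
    """Blend the running label with a pseudo-label derived from the model's prediction.

    soft pseudo-label: the predicted probabilities themselves
    hard pseudo-label: a one-hot vector at argmax(model_probs)
    """
    if hard:
        best = max(range(len(model_probs)), key=lambda k: model_probs[k])
        target = [1.0 if k == best else 0.0 for k in range(len(model_probs))]
    else:
        target = list(model_probs)
    return [momentum * c + (1.0 - momentum) * t for c, t in zip(current, target)]

label = [0.0, 1.0, 0.0]         # given (possibly wrong) label: class 1
prediction = [0.8, 0.15, 0.05]  # hypothetical prediction, held fixed for simplicity
for _ in range(30):
    label = update_label(label, prediction)
print([round(v, 3) for v in label])
```

Each update is a convex combination of two probability vectors, so the label stays normalized; with a model that keeps favoring class 0, the stored label gradually shifts from class 1 toward class 0.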

Validation of Soft Labels in Developing Deep Learning Algori... : The ... Validation of Soft Labels in Developing Deep Learning Algorithms for Detecting Lesions of Myopic Maculopathy From Optical Coherence Tomographic Images. Du, Ran MD *; ... The AUC values of models trained by soft labels in MNV, MTM, and DSM models were 0.985, 0.946, and 0.978; and the AUPR values were 0.908, 0.876, and 0.653 respectively. ...

machine learning - What is the difference between a feature and a label ... Briefly, feature is input; label is output. This applies to both classification and regression problems. A feature is one column of the data in your input set. For instance, if you're trying to predict the type of pet someone will choose, your input features might include age, home region, family income, etc. The label is the final choice, such ...

Deep learning and process understanding for data-driven … Feb 13, 2019 · In short, the similarities between the types of data addressed with classical deep learning applications and geoscientific data make a compelling argument for the integration of deep learning into ...

Label smoothing with Keras, TensorFlow, and Deep Learning Figure 1: Label smoothing is a regularization technique whose goal is to help your model generalize better to new data. This digit is clearly a "7", and if we were to write out the one-hot encoded label vector for this data point it would look like the following: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
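The smoothed target for that "7" follows from the usual formula \(y_{\text{smooth}} = (1-\alpha)\,y + \alpha/K\) with \(K\) classes. A minimal Python sketch assuming \(\alpha = 0.1\), an illustrative choice; in Keras, a comparable effect is available through the label_smoothing argument of tf.keras.losses.CategoricalCrossentropy.

```python
def smooth_labels(one_hot, alpha=0.1):
    """Label smoothing: y_smooth = (1 - alpha) * y + alpha / K over K classes."""
    k = len(one_hot)
    return [(1.0 - alpha) * y + alpha / k for y in one_hot]

seven = [0.0] * 10
seven[7] = 1.0               # one-hot label for the digit "7"
print(smooth_labels(seven))  # about 0.01 for each other class, about 0.91 for class 7
```

The vector still sums to 1, but the model is no longer asked to push the probability of class 7 all the way to 1.0.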

Label-Free Quantification You Can Count On: A Deep Learning Experiment Although it shows excellent correspondence between the two methods, the total number of objects detected with deep learning was around 3% higher. Figure 2: Nuclei detected using fluorescence (left), the corresponding brightfield image (middle), and object shape predicted by deep learning technology (right).

Deciphering interaction fingerprints from protein molecular Dec 09, 2019 · A trio of protein surface patches with the labels, binder, target and random patches were fed into the MaSIF-search network ... Geometric deep …

Adversarial Attacks and Defenses in Deep Learning Mar 01, 2020 · 1. Introduction. A trillion-fold increase in computation power has popularized the usage of deep learning (DL) for handling a variety of machine learning (ML) tasks, such as image classification, natural language processing, and game theory. However, a severe security threat to the existing DL algorithms has been discovered by the research community: …

GitHub - subeeshvasu/Awesome-Learning-with-Label-Noise: A curated list ... 2014 - Learning from Noisy Labels with Deep Neural Networks. 2015-ICLR_W - Training Convolutional Networks with Noisy Labels. 2015-CVPR - Learning from Massive Noisy Labeled Data for Image Classification. 2015-CVPR - Visual recognition by learning from web data: A weakly supervised domain generalization approach. ... 2020-ICPR - Meta Soft Label ...

Understanding Deep Learning on Controlled Noisy Labels In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...

An Introduction to Confident Learning: Finding and Learning with Label ... cleanlab is a framework for machine learning and deep learning with label errors like how PyTorch is a framework for deep learning. ... ImageNet train set identified using confident learning. Label Errors are boxed in red. ... et al. (2013); van Rooyen et al. (2015); Patrini et al. (2017), using soft-pruning via loss-reweighting, to avoid the ...
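The core idea behind confident learning, counting an example as a likely label error when the model confidently predicts a different class, can be sketched with per-class thresholds. This is a simplified, hypothetical illustration, not cleanlab's API or its full algorithm; it assumes the predicted probabilities are out-of-sample (for example, from cross-validation).

```python
def per_class_thresholds(probs, labels, n_classes):
    """t_j = mean predicted probability of class j over the examples labeled j."""
    thresholds = []
    for j in range(n_classes):
        own = [p[j] for p, y in zip(probs, labels) if y == j]
        # Assumption: a class with no labeled examples gets threshold 1.0 (hard to exceed)
        thresholds.append(sum(own) / len(own) if own else 1.0)
    return thresholds

def flag_likely_label_errors(probs, labels, n_classes):
    """Return indices whose confidently predicted class differs from the given label."""
    t = per_class_thresholds(probs, labels, n_classes)
    flagged = []
    for i, (p, y) in enumerate(zip(probs, labels)):
        confident = [j for j in range(n_classes) if p[j] >= t[j]]
        if not confident:
            continue  # no class clears its threshold, so there is no confident guess
        guess = max(confident, key=lambda j: p[j])
        if guess != y:
            flagged.append(i)
    return flagged

# Hypothetical out-of-sample predicted probabilities for classes [0, 1]
probs = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8], [0.95, 0.05]]
labels = [0, 0, 1, 1, 1]
print(flag_likely_label_errors(probs, labels, 2))
```

Only the last example is flagged: it is labeled 1, yet the model's probability for class 0 clears the class-0 threshold while its class-1 probability does not clear the class-1 threshold.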
